Posts tagged community

Recent discussion

For my org, I can imagine using this if it was 2x the size or more, but I can't really think of events I'd run that would be worth the effort to organise for 15 people.

(Maybe like 30% chance I'd use it within 2 years if it had 30+ bedrooms, less than 10% chance at the actual size.)

Cool idea though!

I'm confused. Don't you already have a second building? Is that dedicated towards events or towards more guests?

^I'm going to be lazy and tag a few people: @Joey @KarolinaSarek @Ryan Kidd @Leilani Bellamy @Habryka @IrenaK Not expecting a response, but if you are interested, feel free to comment or DM.

I'm posting it now because it's a pity it was never uploaded, given how much motivation the video gave me for effective altruism.

I am dealing with repetitive strain injury and don’t foresee being able to really respond to any comments (I’m surprised at myself that I wrote all of this without twitching forearms lol!)

I’m a little hesitant to post this, but I thought I should be vulnerable. ...

I think your current outlook should be the default for people who engage on the forum or agree with the homepage of effectivealtruism.com. I’m glad you got there and that you feel (relatively) comfortable about it. I’m sorry that the process of getting there was so trying. It shouldn't be.

It sounds like the tryingness came from a social expectation to identify as capital ‘E’ capital ‘A’ upon finding resonance with the basic ideas and that identifying that way implied an obligation to support and defend every other EA person and project.

I wish EA weren’t a ...

I worked at OpenAI for three years, from 2021 to 2024, on the Alignment team, which eventually became the Superalignment team. I worked on scalable oversight, part of the team developing critiques as a technique for using language models to spot mistakes in other language models...

This announcement was written by Toby Tremlett, but don’t worry, I won’t answer the questions for Lewis.

Lewis Bollard, Program Director of Farm Animal Welfare at Open Philanthropy, will be holding an AMA on Wednesday 8th of May. Put all your questions for him on this thread...

What role do you think journalism can play in advancing the cause of farmed animals? Can you think of any promising topics journalists may want to prioritize in the European context in particular, i.e. topics that have the potential to unlock important gains for farmed animals if seriously investigated and publicized?

About a week ago, Spencer Greenberg and I were debating what proportion of Effective Altruists believe enlightenment is real. Since he has a large audience on X, we thought a poll would be a good way to increase our confidence in our predictions.

Before I share my commentary...

GPT-5 training is probably starting around now. It seems very unlikely that GPT-5 will cause the end of the world. But it’s hard to be sure. I would guess that GPT-5 is more likely to kill me than an asteroid, a supervolcano, a plane crash or a brain tumor. We can predict...

I absolutely sympathize, and I agree that, given the world view and information you have, advocating for a pause makes sense. I would get behind 'regulate AI' or 'regulate AGI', certainly. I think, though, that pausing is an incorrect strategy which would do more harm than good, so despite being aligned with you in being concerned about AGI dangers, I don't endorse that strategy.

Some part of me thinks this oughtn't matter, since there's approximately 0% chance of the movement achieving that literal goal. The point is to build an...

I was interviewed in yesterday’s 80,000 Hours podcast: Dean Spears on why babies are born small in Uttar Pradesh, and how to save their lives. As I say in the podcast, there’s good evidence that this is a cost-effective way to save lives. Many peer-reviewed articles show...

Thanks so much for your response, that all makes sense!

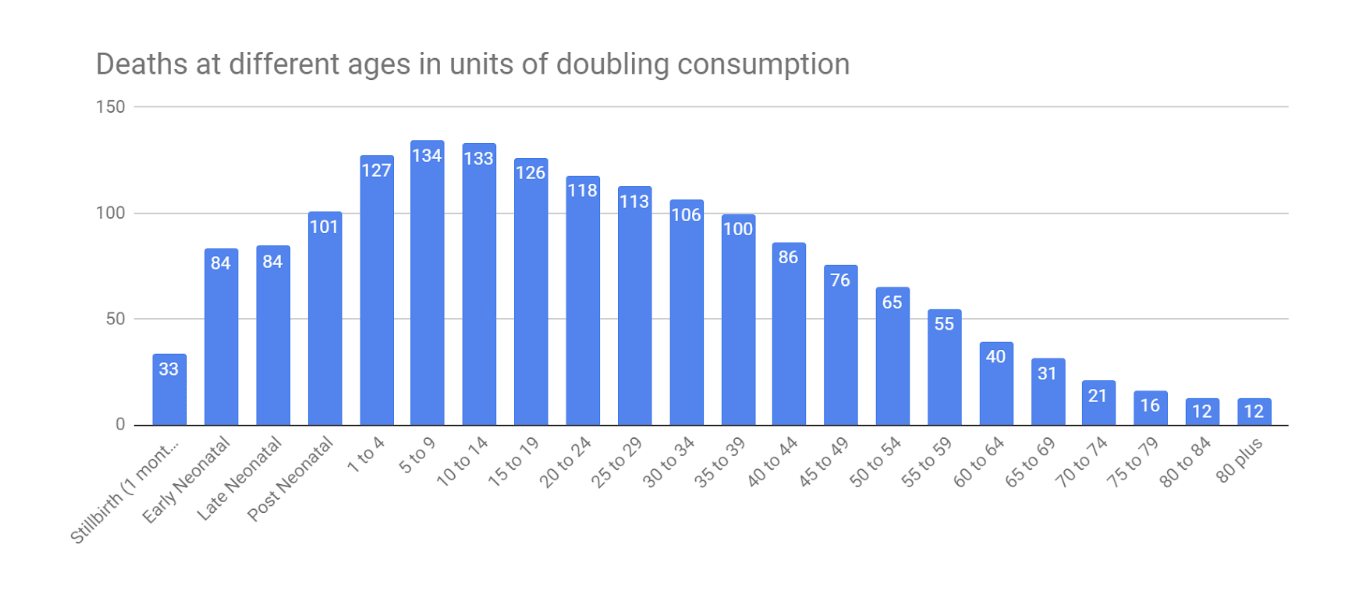

You're understanding question 3 correctly - GiveWell's moral weights look like the following, which is fairly different from valuing every year of life equally.

What is Manifest?

Manifest is a festival for predictions, markets, and mechanisms. It’ll feature serious talks, attendee-run workshops, fun side events, and so many of our favorite people!

Tickets: manifest.is/#tickets

WHEN: June 7-9, 2024, with LessOnline starting May 31 and Summer Camp starting June 3

WHERE: Lighthaven, Berkeley, CA

WHO: Hundreds of folks interested in forecasting, rationality, EA, economics, journalism, tech and more. If you’re reading this, you’re invited! Special guests include Nate Silver, Scott Alexander, Robin Hanson, Dwarkesh Patel, Cate Hall, and many more.

You can find more details about what we’re planning in our announcement post.

Ticket Deadline

We’re planning to increase standard ticket prices from $399 to $499 on Tuesday, May 13th, so get your tickets ASAP!

Prices for tickets to LessOnline (May 31 - June 2) and Summer Camp (June 3 - June 7) will also...

(EA) Hotel dedicated to events, retreats, and bootcamps in Blackpool, UK?

I want to try and gauge what the demand for this might be. Would you be interested in holding or participating in events in such a place? Or work running them? Examples of hosted events could...